Introduction

One peaceful day… after a sudo reboot, the home server's network broke badly…

netplan could probably fix the problems above, but since a Kubernetes version upgrade was already under consideration, let's install and set up the cluster with kubeadm 1.30.3 instead!

Reference: Kubernetes v1.30: Uwubernetes (UwU ♥️)

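However, there is one major difference from the previous Kubernetes version, 1.22.19: the new version no longer supports Docker as a container runtime behind the CRI (Container Runtime Interface). According to the official Kubernetes documentation, Dockershim (the compatibility layer that let Kubernetes work with Docker) was removed starting with the 1.24 release.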

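CRI standard compliance

- Kubernetes introduced a standard called CRI for compatibility with a variety of container runtimes.
- Docker does not implement the CRI directly, so Kubernetes had to maintain separate code, Dockershim, to stay compatible with Docker.
- This added complexity to the Kubernetes codebase, and having to keep guaranteeing compatibility with Docker's non-standard interface was a heavy burden on maintainers.

Lack of compatibility with new features

- Dockershim had limited compatibility with newer Linux kernel features such as cgroups v2 and user namespaces.
- These features are actively supported by current CRI runtimes and improve security and performance.

Community transition

- The Kubernetes community recommends moving to container runtimes other than Docker, which increases the flexibility of Kubernetes.
- Runtimes such as containerd and CRI-O fully support the CRI and take over the role of Dockershim.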

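As a result, Kubernetes removed Dockershim, which had been a stopgap for keeping Docker compatibility, and now relies on standard CRI-compliant runtimes for long-term development and more efficient maintenance.

Now, let's start installing and setting up Uwubernetes, Kubernetes 1.30.3!

Installation

The specs of the machine used are listed in the Home Server 구축기 (home server build log) post, and the OS is Ubuntu Server 24.04 LTS.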

    $ cat /etc/os-release
    PRETTY_NAME="Ubuntu 24.04 LTS"
    NAME="Ubuntu"
    VERSION_ID="24.04"
    VERSION="24.04 LTS (Noble Numbat)"
    ...

The related code below can be found at GitHub: Zerohertz/k8s-on-premise - v1.30.3-4.Argo-CD.

Setup

Before starting, disable swap on the machine, install the required dependencies, and open the ports that will be used in the firewall (UFW, Uncomplicated Firewall).

    $ sudo swapoff -a
    $ sudo sed -i '/swap.img/s/^\(.*\)$/#\1/g' /etc/fstab
    $ free -h
                   total        used        free      shared  buff/cache   available
    Mem:            27Gi       942Mi        20Gi       1.3Mi       6.2Gi        26Gi
    Swap:             0B          0B          0B
    $ sudo apt-get update
    $ sudo apt-get install -y apt-transport-https ca-certificates curl gpg
    $ sudo ufw allow in 6443/tcp
    $ sudo ufw allow in 2379/tcp
    $ sudo ufw allow in 2380/tcp
    $ sudo ufw allow in 10257/tcp
    $ sudo ufw allow in 10259/tcp
    $ sudo ufw allow in 10250/tcp
    $ sudo ufw allow in 10249/tcp
    $ sudo ufw allow in 4789/udp
    $ sudo ufw allow in 5473/tcp
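The sed expression is the least obvious step here, so the sketch below runs the same expression (without -i) against a temporary sample file to show its effect. The sample fstab contents and the function name comment_out_swap are made up for illustration; nothing on the real system is touched.

```bash
#!/usr/bin/env bash
# Dry run of the swap-disabling sed expression on a sample fstab.
# The sample entries below are illustrative, not taken from the real host.

comment_out_swap() {
  # Prefix every line that mentions swap.img with '#'; other lines pass through.
  sed '/swap.img/s/^\(.*\)$/#\1/g' "$1"
}

sample=$(mktemp)
cat > "$sample" <<'EOF'
/dev/disk/by-uuid/0000-0000 / ext4 defaults 0 1
/swap.img none swap sw 0 0
EOF

comment_out_swap "$sample"
```

Because the address /swap.img/ also matches a line that is already commented out, running the in-place version twice would simply prepend a second #, which is harmless.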

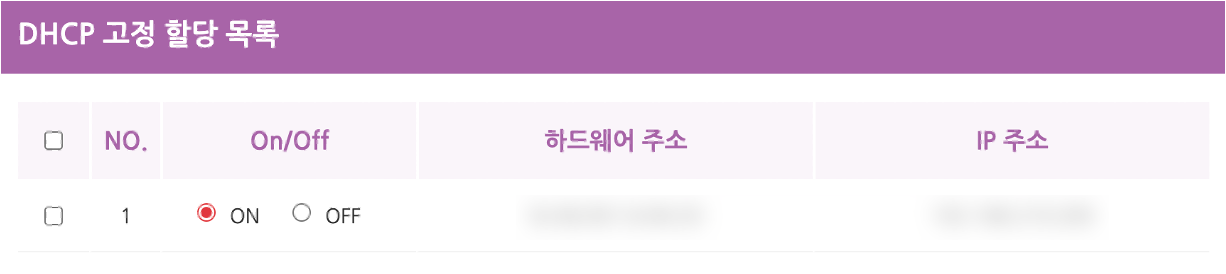

The purpose of each port is listed below. Unlike the previous post, Calico is used as the CNI (Container Network Interface) instead of Flannel.

| Port | Protocol | Service | Description |
| --- | --- | --- | --- |
| 6443 | TCP | Kubernetes API Server | Default HTTPS port for communication with the Kubernetes cluster. |
| 2379 | TCP | etcd Client | Default port for etcd client communication. |
| 2380 | TCP | etcd Peer | Default port for etcd peer-to-peer communication. |
| 10257 | TCP | kube-controller-manager | Health check HTTPS port for kube-controller-manager. |
| 10259 | TCP | kube-scheduler | Health check HTTPS port for kube-scheduler. |
| 10250 | TCP | Kubelet | HTTPS port used by Kubelet for metrics and control. |
| 10249 | TCP | Kube Proxy | Metrics port for Kube Proxy (bound to localhost by default). |
| 4789 | UDP | VXLAN (Calico) | Port used by Calico in VXLAN mode for inter-node communication. |
| 5473 | TCP | Calico Typha | Port used for communication between the Calico Typha server and its agents. |
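The ports in the table can also be kept as data and turned into ufw commands in a loop. The sketch below only prints the commands (a dry run) so they can be reviewed first; the names PORTS and print_ufw_rules are illustrative.

```bash
#!/usr/bin/env bash
# Ports from the table above, written as port/protocol pairs.
PORTS=(6443/tcp 2379/tcp 2380/tcp 10257/tcp 10259/tcp 10250/tcp 10249/tcp 4789/udp 5473/tcp)

# Print the ufw commands instead of executing them.
print_ufw_rules() {
  local rule
  for rule in "${PORTS[@]}"; do
    echo "sudo ufw allow in $rule"
  done
}

print_ufw_rules
```

After reviewing the output, piping it to a shell (for example print_ufw_rules | bash) would apply the rules, and sudo ufw status lists what is currently allowed.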

Installing containerd

As explained above, Docker can no longer be used as the container runtime, so containerd is installed.

    $ sudo install -m 0755 -d /etc/apt/keyrings
    $ sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
    $ sudo chmod a+r /etc/apt/keyrings/docker.asc
    $ echo \
        "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
        $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
        sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
    $ sudo apt-get update
    $ sudo apt-get install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin
    $ sudo containerd config default | sudo tee /etc/containerd/config.toml

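After running the last line of the script above, edit the generated file as shown below so that the runc runtime uses the systemd cgroup driver.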

/etc/containerd/config.toml

    ...
    [plugins]
      ...
      [plugins."io.containerd.grpc.v1.cri"]
        ...
        [plugins."io.containerd.grpc.v1.cri".containerd]
          ...
          [plugins."io.containerd.grpc.v1.cri".containerd.runtimes]
            [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
              ...
              [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
                ...
                SystemdCgroup = true
                ...

Then reload systemd and restart containerd.

    $ sudo systemctl daemon-reload
    $ sudo systemctl restart containerd
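The manual edit can also be scripted. The sketch below assumes the generated default config contains the line SystemdCgroup = false (which is what containerd 1.x defaults usually emit, but worth checking on your version) and demonstrates the substitution on a trimmed sample file rather than on /etc/containerd/config.toml. The function name enable_systemd_cgroup is illustrative.

```bash
#!/usr/bin/env bash
# Flip SystemdCgroup from false to true while preserving indentation.
enable_systemd_cgroup() {
  sed 's/^\([[:space:]]*\)SystemdCgroup = false/\1SystemdCgroup = true/' "$1"
}

# Trimmed, illustrative excerpt of a generated config.toml.
sample=$(mktemp)
cat > "$sample" <<'EOF'
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  BinaryName = ""
  SystemdCgroup = false
EOF

enable_systemd_cgroup "$sample"

# On the real host the same expression could be applied in place:
#   sudo sed -i 's/^\([[:space:]]*\)SystemdCgroup = false/\1SystemdCgroup = true/' /etc/containerd/config.toml
#   grep SystemdCgroup /etc/containerd/config.toml
```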

Container Runtimes

Install the plugins required for CNI and configure the kernel modules and network settings.

    $ wget https://github.com/containernetworking/plugins/releases/download/v1.5.1/cni-plugins-linux-amd64-v1.5.1.tgz
    $ sudo mkdir -p /opt/cni/bin
    $ sudo tar Cxzvf /opt/cni/bin cni-plugins-linux-amd64-v1.5.1.tgz
    $ cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
    overlay
    br_netfilter
    EOF
    $ sudo modprobe overlay
    $ sudo modprobe br_netfilter
    $ cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    net.ipv4.ip_forward = 1
    EOF
    $ sudo sysctl --system
    * Applying /usr/lib/sysctl.d/10-apparmor.conf ...
    ...
    net.ipv4.ip_forward = 1
    $ sysctl net.ipv4.ip_forward
    net.ipv4.ip_forward = 1
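To confirm that all three settings took effect, the values can be read back from /proc/sys. The helper below is a minimal sketch: check_sysctl is a hypothetical name, and the optional third argument (a root directory) exists only so the demo can run against a fake tree instead of the live kernel.

```bash
#!/usr/bin/env bash
# check_sysctl KEY EXPECTED [ROOT]
# Succeeds when the sysctl KEY (dotted form) currently equals EXPECTED.
check_sysctl() {
  local key=$1 expected=$2 root=${3:-/proc/sys}
  local path="$root/${key//.//}"   # net.ipv4.ip_forward -> net/ipv4/ip_forward
  [ -r "$path" ] && [ "$(cat "$path")" = "$expected" ]
}

# Demo against a fake tree so the sketch runs anywhere.
fake=$(mktemp -d)
mkdir -p "$fake/net/ipv4"
echo 1 > "$fake/net/ipv4/ip_forward"
check_sysctl net.ipv4.ip_forward 1 "$fake" && echo "net.ipv4.ip_forward ok"

# On the real host, drop the third argument:
#   for key in net.bridge.bridge-nf-call-iptables \
#              net.bridge.bridge-nf-call-ip6tables \
#              net.ipv4.ip_forward; do
#     check_sysctl "$key" 1 && echo "$key ok" || echo "$key NOT set"
#   done
```

The two bridge keys only exist once br_netfilter is loaded, so a missing key usually points at the modprobe step rather than at the sysctl file.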

Installing kubeadm

Almost there…! Install kubelet and kubectl together with kubeadm.

    $ curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
    $ echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list
    deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.30/deb/ /
    $ sudo apt-get update
    $ sudo apt-get install -y kubelet kubeadm kubectl
    $ sudo apt-mark hold kubelet kubeadm kubectl
    $ sudo systemctl enable --now kubelet
    $ kubeadm version
    kubeadm version: &version.Info{Major:"1", Minor:"30", GitVersion:"v1.30.3", GitCommit:"6fc0a69044f1ac4c13841ec4391224a2df241460", GitTreeState:"clean", BuildDate:"2024-07-16T23:53:15Z", GoVersion:"go1.22.5", Compiler:"gc", Platform:"linux/amd64"}

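Once Kubernetes has been set up with kubeadm, crictl can be used to inspect the current state of the containers, and the configuration below removes its warning messages. (kubectl can show the same information, but crictl is useful when kube-apiserver is not working properly.)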

/etc/crictl.yaml

    runtime-endpoint: unix:///run/containerd/containerd.sock
    image-endpoint: unix:///run/containerd/containerd.sock
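As a sketch, the same file can be generated from a small function, which keeps the socket path in one place. write_crictl_config is a hypothetical helper; the demo writes to a temporary file, and the commented lines show how it might be installed and used on the real host.

```bash
#!/usr/bin/env bash
# write_crictl_config TARGET [SOCKET]
# Writes a crictl config that points both endpoints at the same CRI socket.
write_crictl_config() {
  local target=$1 socket=${2:-unix:///run/containerd/containerd.sock}
  cat > "$target" <<EOF
runtime-endpoint: $socket
image-endpoint: $socket
EOF
}

demo=$(mktemp)
write_crictl_config "$demo"
cat "$demo"

# On the real host (requires root):
#   sudo install -m 0644 "$demo" /etc/crictl.yaml
#   sudo crictl pods     # list pod sandboxes
#   sudo crictl ps -a    # list all containers, including exited ones
```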

Create a cluster with kubeadm

Because Calico is used as the CNI, pod-network-cidr is set to 192.168.0.0/16, and because this is a single-node cluster, the control-plane taint is removed so that workloads can be scheduled on the node.

    $ sudo kubeadm init --pod-network-cidr=192.168.0.0/16
    ...
    Your Kubernetes control-plane has initialized successfully!
    ...
    $ mkdir -p $HOME/.kube
    $ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    $ sudo chown $(id -u):$(id -g) $HOME/.kube/config
    $ kubectl taint nodes --all node-role.kubernetes.io/control-plane-
    node/0hz-controlplane untainted
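Home routers often hand out addresses in 192.168.x.x, so it can be worth checking whether the pod CIDR overlaps the host LAN before running kubeadm init, since overlapping ranges can lead to confusing routing. The sketch below is a pure-bash IPv4 overlap check; the function names and the HOST_CIDR value are hypothetical, and the post itself does not say which LAN range the server uses.

```bash
#!/usr/bin/env bash
# Convert a dotted IPv4 address to an integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# Print the first and last address of a CIDR block as integers.
cidr_range() {
  local ip=${1%/*} bits=${1#*/} n mask
  n=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  echo "$(( n & mask )) $(( (n & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Succeeds when the two CIDR blocks share at least one address.
cidr_overlap() {
  local a_lo a_hi b_lo b_hi
  read -r a_lo a_hi <<<"$(cidr_range "$1")"
  read -r b_lo b_hi <<<"$(cidr_range "$2")"
  (( a_lo <= b_hi && b_lo <= a_hi ))
}

POD_CIDR=192.168.0.0/16
HOST_CIDR=192.168.0.0/24   # hypothetical home LAN, replace with your own

if cidr_overlap "$POD_CIDR" "$HOST_CIDR"; then
  echo "overlap: $POD_CIDR and $HOST_CIDR"
else
  echo "no overlap"
fi
```

If a different pod CIDR is chosen, the ipPools cidr in Calico's custom-resources.yaml would presumably need to be changed to match.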

CNI setup (Calico)

Once kubectl confirms that the cluster is working normally, set up the CNI as follows.

    $ kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.1/manifests/tigera-operator.yaml
    namespace/tigera-operator created
    ...
    deployment.apps/tigera-operator created
    $ kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.1/manifests/custom-resources.yaml
    installation.operator.tigera.io/default created
    apiserver.operator.tigera.io/default created
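The Calico pods take a little while to come up, so a small retry loop can be handy for waiting instead of re-running commands by hand. This is a generic sketch: retry and flaky are illustrative names, and the demo uses a stand-in command that succeeds on its third call so it runs without a cluster.

```bash
#!/usr/bin/env bash
# retry ATTEMPTS DELAY_SECONDS COMMAND...
# Runs COMMAND until it succeeds or ATTEMPTS is exhausted.
retry() {
  local attempts=$1 delay=$2 i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    if "$@"; then return 0; fi
    if (( i < attempts )); then sleep "$delay"; fi
  done
  return 1
}

# Stand-in for a real check: fails twice, then succeeds.
counter=$(mktemp)
echo 0 > "$counter"
flaky() {
  local n=$(( $(cat "$counter") + 1 ))
  echo "$n" > "$counter"
  (( n >= 3 ))
}

retry 5 0 flaky && echo "succeeded after $(cat "$counter") attempts"

# Against the cluster, something like this could be used:
#   retry 30 10 kubectl wait --for=condition=Ready nodes --all --timeout=10s
#   kubectl get pods -n calico-system
```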

K9s

K9s was also installed for quick log lookups.

    $ wget https://github.com/derailed/k9s/releases/download/v0.32.5/k9s_Linux_amd64.tar.gz
    $ tar -zxvf ./k9s_Linux_amd64.tar.gz
    $ mkdir -p ~/.local/bin
    $ mv ./k9s ~/.local/bin && chmod +x ~/.local/bin/k9s
    $ rm ./k9s_Linux_amd64.tar.gz LICENSE README.md

Services

As a bonus, here are the settings of the services currently deployed on the node with Helm. Traefik is used for ingress.

Argo CD

values.yaml

    configs:
      params:
        server.insecure: true
      secret:
        argocdServerAdminPassword: "${PASSWORD}"
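The values files in this section use ${...} placeholders instead of real credentials. How the author fills them in is not stated; one possible approach, sketched below, is a small bash filter that replaces a single named placeholder on stdin. render_placeholder and the example value are hypothetical. Note also that the Argo CD chart documents argocdServerAdminPassword as a bcrypt hash, so the substituted value would normally be a hash rather than the plain password.

```bash
#!/usr/bin/env bash
# render_placeholder NAME VALUE < template
# Replaces every literal ${NAME} in the input with VALUE.
render_placeholder() {
  local name=$1 value=$2 needle line out
  needle="\${$name}"                      # the literal text ${NAME}
  while IFS= read -r line || [ -n "$line" ]; do
    out=${line//"$needle"/"$value"}       # quoted so both sides are literal
    printf '%s\n' "$out"
  done
}

# Illustrative value only; never commit a real secret.
EXAMPLE_VALUE='example-only'

printf '%s\n' 'argocdServerAdminPassword: "${PASSWORD}"' |
  render_placeholder PASSWORD "$EXAMPLE_VALUE"

# With a real file, the rendered output could be piped to Helm, e.g.:
#   render_placeholder PASSWORD "$HASH" < values.yaml | helm upgrade --install ... -f -
```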

Airflow

setup.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: airflow-storage
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: airflow-local-dags-pv
      labels:
        type: airflow-dags
      finalizers:
        - kubernetes.io/pv-protection
    spec:
      storageClassName: airflow-storage
      capacity:
        storage: 10Gi
      accessModes:
        - ReadOnlyMany
      hostPath:
        path: ""
      persistentVolumeReclaimPolicy: Retain
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: airflow-local-dags-pvc
      namespace: airflow
      annotations: {}
    spec:
      storageClassName: airflow-storage
      accessModes:
        - ReadOnlyMany
      resources:
        requests:
          storage: 10Gi
      selector:
        matchLabels:
          type: airflow-dags
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: airflow-local-logs-pv
      labels:
        type: airflow-logs
    spec:
      storageClassName: airflow-storage
      capacity:
        storage: 10Gi
      accessModes:
        - ReadWriteMany
      hostPath:
        path: ""
      persistentVolumeReclaimPolicy: Retain
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: airflow-local-logs-pvc
      namespace: airflow
      annotations: {}
    spec:
      storageClassName: airflow-storage
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 10Gi
      selector:
        matchLabels:
          type: airflow-logs
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: airflow-stock-pv
      labels:
        type: airflow-stock
    spec:
      storageClassName: airflow-storage
      capacity:
        storage: 10Gi
      accessModes:
        - ReadOnlyMany
      hostPath:
        path: ""
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: airflow-stock-pvc
      namespace: airflow
    spec:
      storageClassName: airflow-storage
      accessModes:
        - ReadOnlyMany
      resources:
        requests:
          storage: 10Gi
      selector:
        matchLabels:
          type: airflow-stock
    ---
    apiVersion: traefik.io/v1alpha1
    kind: Middleware
    metadata:
      name: airflow-forward-auth-mw
      namespace: airflow
    spec:
      forwardAuth:
        address: http://forward-auth.oauth.svc.cluster.local:4181
        trustForwardHeader: true
        authResponseHeaders:
          - X-Forwarded-User
    ---
    apiVersion: traefik.io/v1alpha1
    kind: IngressRoute
    metadata:
      name: airflow-webserver
      namespace: airflow
    spec:
      entryPoints:
        - websecure
      routes:
        - match: Host(`airflow.zerohertz.xyz`)
          kind: Rule
          middlewares:
            - name: airflow-forward-auth-mw
          services:
            - name: airflow-webserver
              port: 8080
      tls:
        certResolver: zerohertz-resolver
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      name: airflow-webserver-secret-key
      namespace: airflow
    type: Opaque
    data:
      webserver-secret-key: ${SECRET}
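Values under data: in a Kubernetes Secret must be base64-encoded, so whatever replaces ${SECRET} above has to be encoded first. The sketch below generates a random key and encodes it; generate_key and encode_secret are illustrative names, and the key format (32 hex characters) is an assumption rather than something the post specifies.

```bash
#!/usr/bin/env bash
# 16 random bytes rendered as 32 hexadecimal characters.
generate_key() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Base64-encode a string without a trailing newline, as Secret data expects.
encode_secret() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

key=$(generate_key)
encoded=$(encode_secret "$key")
echo "webserver-secret-key: $encoded"
```

Alternatively, a Secret's stringData field accepts unencoded values and lets the API server do the encoding.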

values.yaml

    executor: KubernetesExecutor

    data:
      metadataConnection:
        user: ${USERNAME}
        pass: ${PASSWORD}

    webserverSecretKeySecretName: airflow-webserver-secret-key

    createUserJob:
      useHelmHooks: false
      applyCustomEnv: false

    migrateDatabaseJob:
      useHelmHooks: false
      applyCustomEnv: false

    webserver:
      defaultUser:
        username: ${USERNAME}
        email: ${EMAIL}
        password: ${PASSWORD}
      webserverConfig: APP_THEME = "simplex.css"

    postgresql:
      auth:
        postgresPassword: ${PASSWORD}
        username: ${USERNAME}
        password: ${PASSWORD}

    config:
      core:
        colored_console_log: true
      logging:
        colored_console_log: true

    dags:
      persistence:
        enabled: true
        size: 10Gi
        storageClassName: airflow-storage
        accessMode: ReadOnlyMany
        existingClaim: airflow-local-dags-pvc

    logs:
      persistence:
        enabled: true
        size: 10Gi
        storageClassName: airflow-storage
        existingClaim: airflow-local-logs-pvc

Nextcloud

setup.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nextcloud-storage
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nextcloud-etc-pv
    spec:
      storageClassName: nextcloud-storage
      accessModes:
        - ReadWriteOnce
      capacity:
        storage: 10Gi
      hostPath:
        path: ""
      persistentVolumeReclaimPolicy: Retain
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nextcloud-db-pv
      labels:
        app: nextcloud-db
    spec:
      storageClassName: nextcloud-storage
      accessModes:
        - ReadWriteOnce
      capacity:
        storage: 10Gi
      hostPath:
        path: ""
      persistentVolumeReclaimPolicy: Retain
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nextcloud-data-pv
    spec:
      storageClassName: nextcloud-storage
      accessModes:
        - ReadWriteOnce
      capacity:
        storage: 600Gi
      hostPath:
        path: ""
      persistentVolumeReclaimPolicy: Retain
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nextcloud-db-pvc
      namespace: nextcloud
    spec:
      storageClassName: nextcloud-storage
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      selector:
        matchLabels:
          app: nextcloud-db
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nextcloud-data-pvc
      namespace: nextcloud
    spec:
      storageClassName: nextcloud-storage
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 600Gi
    ---
    apiVersion: traefik.io/v1alpha1
    kind: IngressRoute
    metadata:
      name: nextcloud
      namespace: nextcloud
    spec:
      entryPoints:
        - websecure
      routes:
        - match: Host(`cloud.zerohertz.xyz`)
          kind: Rule
          services:
            - name: nextcloud
              port: 8080
      tls:
        certResolver: zerohertz-resolver

values.yaml

    nextcloud:
      host: cloud.zerohertz.xyz
      username: ${USERNAME}
      password: ${PASSWORD}

    internalDatabase:
      enabled: false

    externalDatabase:
      enabled: true
      type: postgresql
      host: ${HOST}:${PORT}
      user: ${USERNAME}
      password: ${PASSWORD}

    postgresql:
      enabled: true
      global:
        postgresql:
          auth:
            username: ${USERNAME}
            password: ${PASSWORD}
      primary:
        persistence:
          enabled: true

    redis:
      auth:
        password: ${USERNAME}
      global:
        storageClass: local-path

    persistence:
      enabled: true
      size: 10Gi
      nextcloudData:
        enabled: true
        size: 600Gi

    metrics:
      enabled: true

The polling URL does not start with HTTPS despite the login URL started with HTTPS. Login will not be possible because this might be a security issue. Please contact your administrator.

If the error above appears when connecting the Nextcloud desktop app, set overwrite.cli.url and overwriteprotocol as shown below.

config/config.php

    <?php
    $CONFIG = array (
      ...
      'overwrite.cli.url' => 'https://cloud.zerohertz.xyz',
      'overwriteprotocol' => 'https',
    );