Introduction

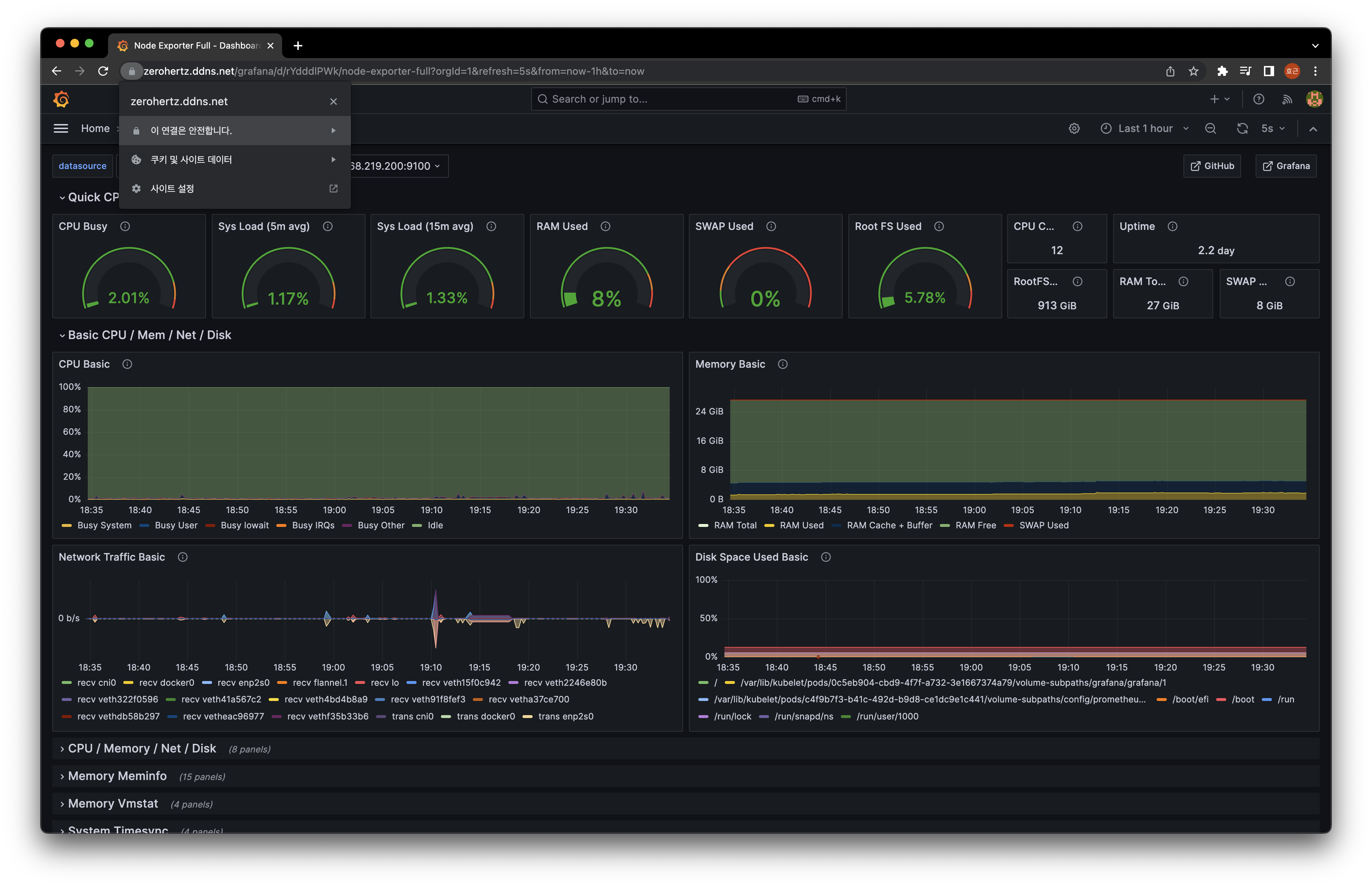

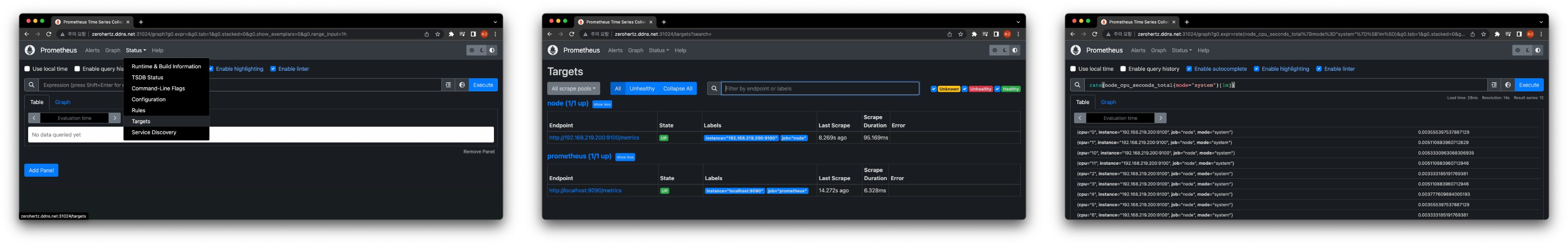

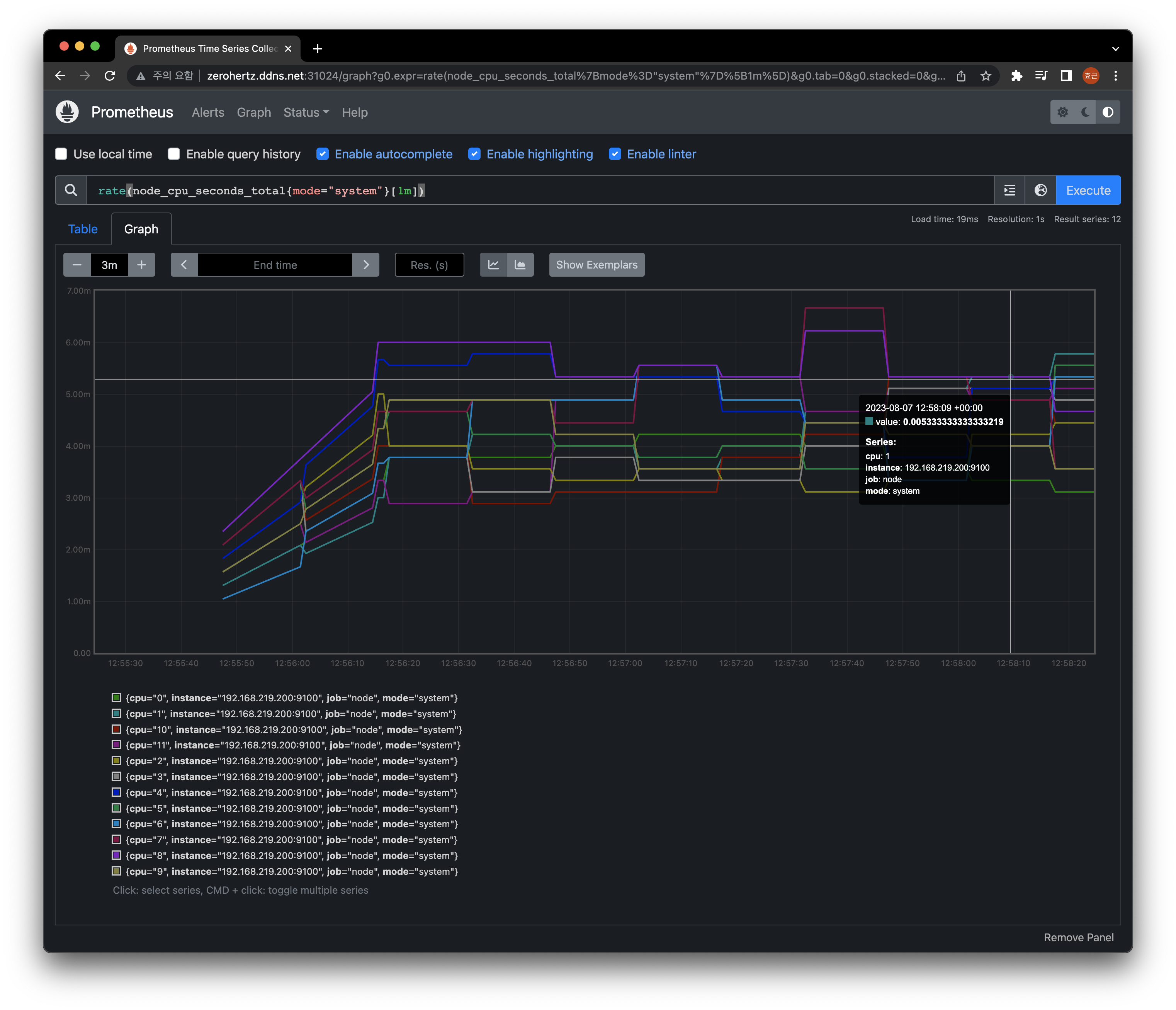

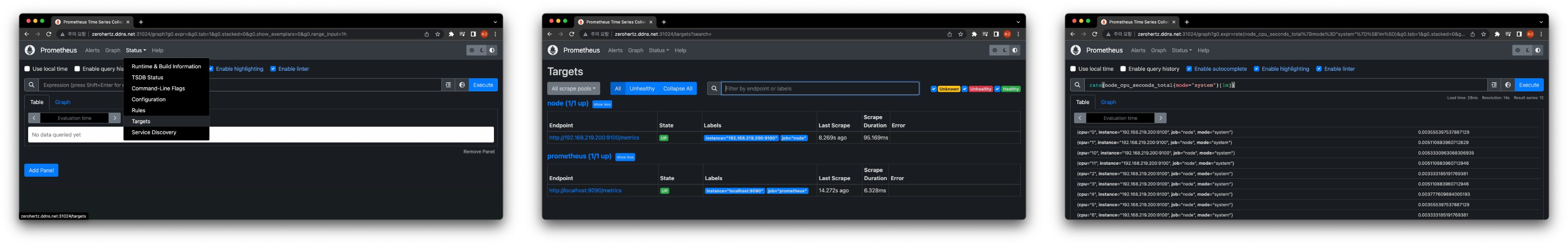

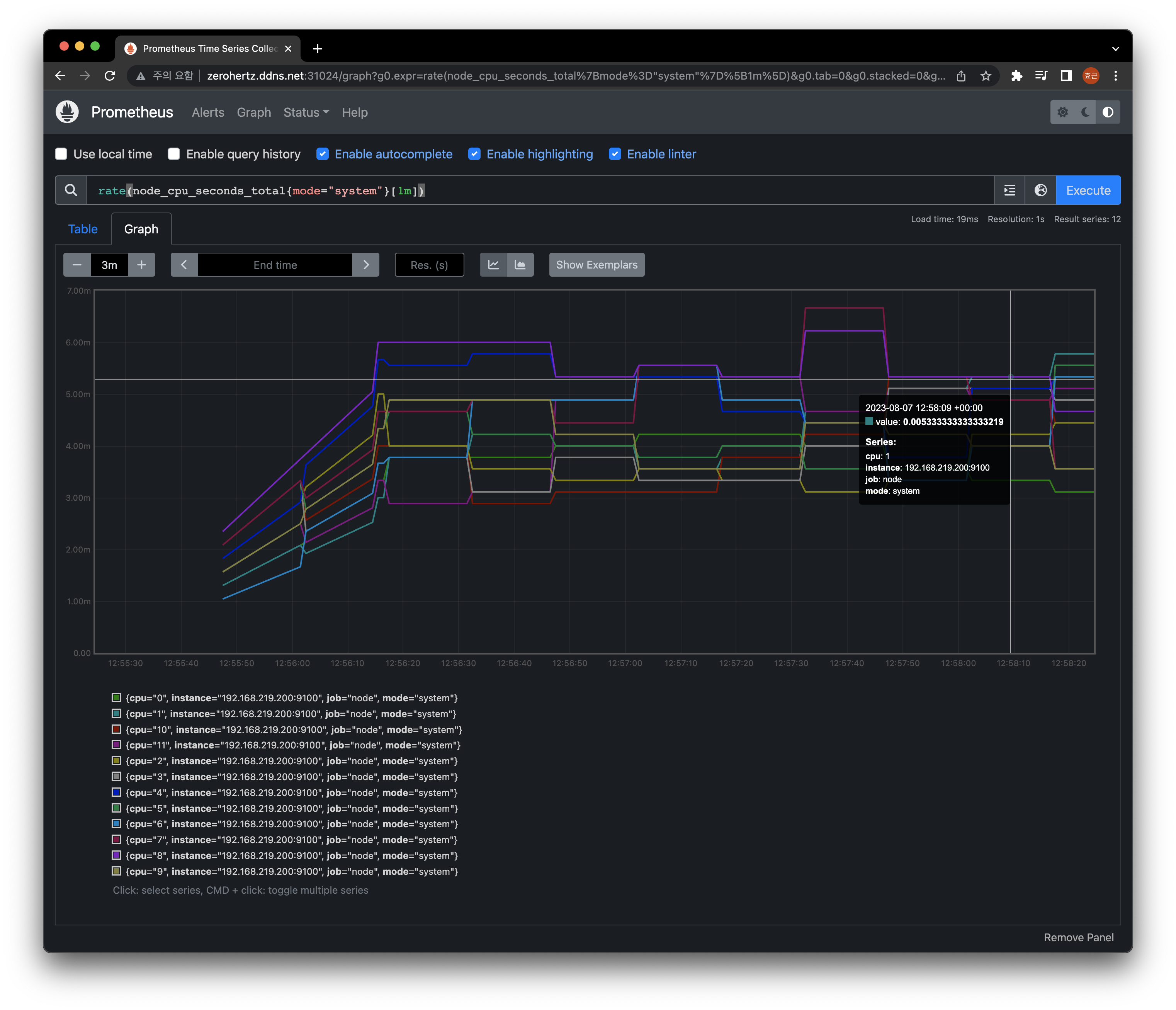

Collecting Node Exporter system metrics with Prometheus and visualizing them with Grafana!

Node Exporter

```shell
$ wget https://github.com/prometheus/node_exporter/releases/download/v1.6.1/node_exporter-1.6.1.linux-amd64.tar.gz
$ tar xvfz node_exporter-1.6.1.linux-amd64.tar.gz
$ sudo mv node_exporter-1.6.1.linux-amd64/node_exporter /usr/local/bin/node_exporter
```
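Before installing, it can be worth checking the tarball against the checksum list published with the release. The sketch below assumes the release also provides a `sha256sums.txt` file whose lines look like `<hex digest>  <filename>`; the helper name `verify_sha256` is hypothetical.

```python
import hashlib
from pathlib import Path


def verify_sha256(tarball: str, sums_text: str) -> bool:
    """Return True if the tarball's SHA-256 matches its entry in a sha256sums-style listing.

    Assumes each line looks like "<hex digest>  <filename>" (a leading "*" on the
    filename, as written by `sha256sum -b`, is tolerated).
    """
    name = Path(tarball).name
    expected = None
    for line in sums_text.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[1].lstrip("*") == name:
            expected = parts[0].lower()
            break
    if expected is None:
        raise KeyError(f"{name} not listed in checksum file")

    digest = hashlib.sha256()
    with open(tarball, "rb") as f:
        # Hash in 1 MiB chunks so the archive is never read into memory at once
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected
```

Usage, after downloading both files: `verify_sha256("node_exporter-1.6.1.linux-amd64.tar.gz", Path("sha256sums.txt").read_text())`.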

/etc/systemd/system/node_exporter.service
```ini
[Unit]
Description=Node Exporter
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
ExecStart=/usr/local/bin/node_exporter

[Install]
WantedBy=multi-user.target
```

```shell
$ sudo systemctl daemon-reload
$ sudo systemctl start node_exporter
$ sudo systemctl enable node_exporter
```

You can then check the exported metrics at http://${CLUSTER_IP}:9100/metrics.
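The `/metrics` page is plain text in the Prometheus exposition format: `# HELP` / `# TYPE` comment lines followed by samples of the form `name{label="value",...} value`. A rough parser like the one below (hypothetical helpers `parse_metrics` and `fetch_metrics`, stdlib only) is enough for a quick sanity check. It ignores optional timestamps and does not handle escaped quotes or `}` inside label values, so treat it as a sketch rather than a full parser.

```python
import re
from urllib.request import urlopen

# metric name, optional {labels}, then the value (a trailing timestamp is ignored)
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_metrics(text: str):
    """Yield (name, labels, value) tuples from Prometheus text-format output."""
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        m = SAMPLE_RE.match(line)
        if not m:
            continue
        name, raw_labels, value = m.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        yield name, labels, float(value)  # float() also accepts NaN and +Inf


def fetch_metrics(url: str) -> str:
    """Download the raw metrics page, e.g. http://<node-ip>:9100/metrics."""
    with urlopen(url, timeout=5) as resp:
        return resp.read().decode("utf-8")
```

For example, `[s for s in parse_metrics(fetch_metrics(url)) if s[0] == "node_load1"]` with `url` set to the node's real address in place of `${CLUSTER_IP}`.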

Prometheus

configmap/prometheus.yaml
```yaml
global:
  scrape_interval: 5s
  evaluation_interval: 5s
scrape_configs:
  - job_name: 'prometheus'
    static_configs:
      - targets: ['localhost:9090']
  - job_name: 'node'
    static_configs:
      # Node Exporter listens on 9100 by default (see the /metrics URL above)
      - targets: ['${CLUSTER_IP}:9100']
```

```shell
$ kubectl create namespace monitoring
$ kubectl create configmap prometheus --from-file=prometheus.yml=configmap/prometheus.yaml -n monitoring
```
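The config files and manifests in this post use `${...}` placeholders such as `${CLUSTER_IP}`, and neither `kubectl` nor Prometheus expands them, so they have to be filled in before the files are loaded. One option is Python's `string.Template`, which uses the same `${name}` syntax; the sketch below (hypothetical helpers `render` and `render_file`) fails loudly when a placeholder has no value. `envsubst` from GNU gettext is a common shell alternative.

```python
from pathlib import Path
from string import Template


def render(template_text: str, values: dict) -> str:
    """Fill ${NAME} placeholders; raises KeyError if any placeholder has no value.

    A literal "$" in the template must be written as "$$".
    """
    return Template(template_text).substitute(values)


def render_file(src: str, dst: str, values: dict) -> None:
    """Render a template file (e.g. configmap/prometheus.yaml) into a new file."""
    Path(dst).write_text(render(Path(src).read_text(), values))
```

The rendered file, not the template, is then what gets passed to `--from-file` or `kubectl apply -f`.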

prometheus.yaml
```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: prometheus-storage
provisioner: kubernetes.io/no-provisioner
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: prometheus-logs
  labels:
    type: prometheus-logs
spec:
  storageClassName: prometheus-storage
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "${DIRECTORY}/logs"
  persistentVolumeReclaimPolicy: Retain
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: prometheus
  namespace: monitoring
spec:
  storageClassName: prometheus-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  selector:
    matchLabels:
      type: prometheus-logs
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: monitoring
spec:
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
        - name: prometheus
          image: prom/prometheus
          ports:
            - containerPort: 9090
          volumeMounts:
            - name: data
              mountPath: /prometheus
            - name: config
              mountPath: /etc/prometheus/prometheus.yml
              subPath: prometheus.yml
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: prometheus
        - name: config
          configMap:
            name: prometheus
---
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: monitoring
spec:
  ports:
    - port: 9090
  selector:
    app: prometheus
```

```shell
$ kubectl apply -f prometheus.yaml
```
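To confirm that both scrape jobs are healthy without opening the UI, the Prometheus HTTP API (`/api/v1/query`) can be asked for the built-in `up` metric, which is 1 when the last scrape of a target succeeded and 0 when it failed. Because the Service above is a ClusterIP, this sketch assumes a port-forward such as `kubectl port-forward svc/prometheus 9090:9090 -n monitoring` is running; `query` and `target_health` are hypothetical helper names.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def query(base_url: str, promql: str) -> dict:
    """Run an instant query against the Prometheus HTTP API (/api/v1/query)."""
    url = f"{base_url}/api/v1/query?{urlencode({'query': promql})}"
    with urlopen(url, timeout=5) as resp:
        return json.load(resp)


def target_health(response: dict) -> dict:
    """Map "job/instance" to True/False from the response of an `up` query."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response.get('error')}")
    health = {}
    for series in response["data"]["result"]:
        labels = series["metric"]
        _timestamp, value = series["value"]  # instant-vector samples are [unix_ts, "value"]
        health[f"{labels.get('job')}/{labels.get('instance')}"] = value == "1"
    return health
```

Usage: `print(target_health(query("http://localhost:9090", "up")))`. A `False` for the `node` job usually points at the target address or port in the scrape config.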

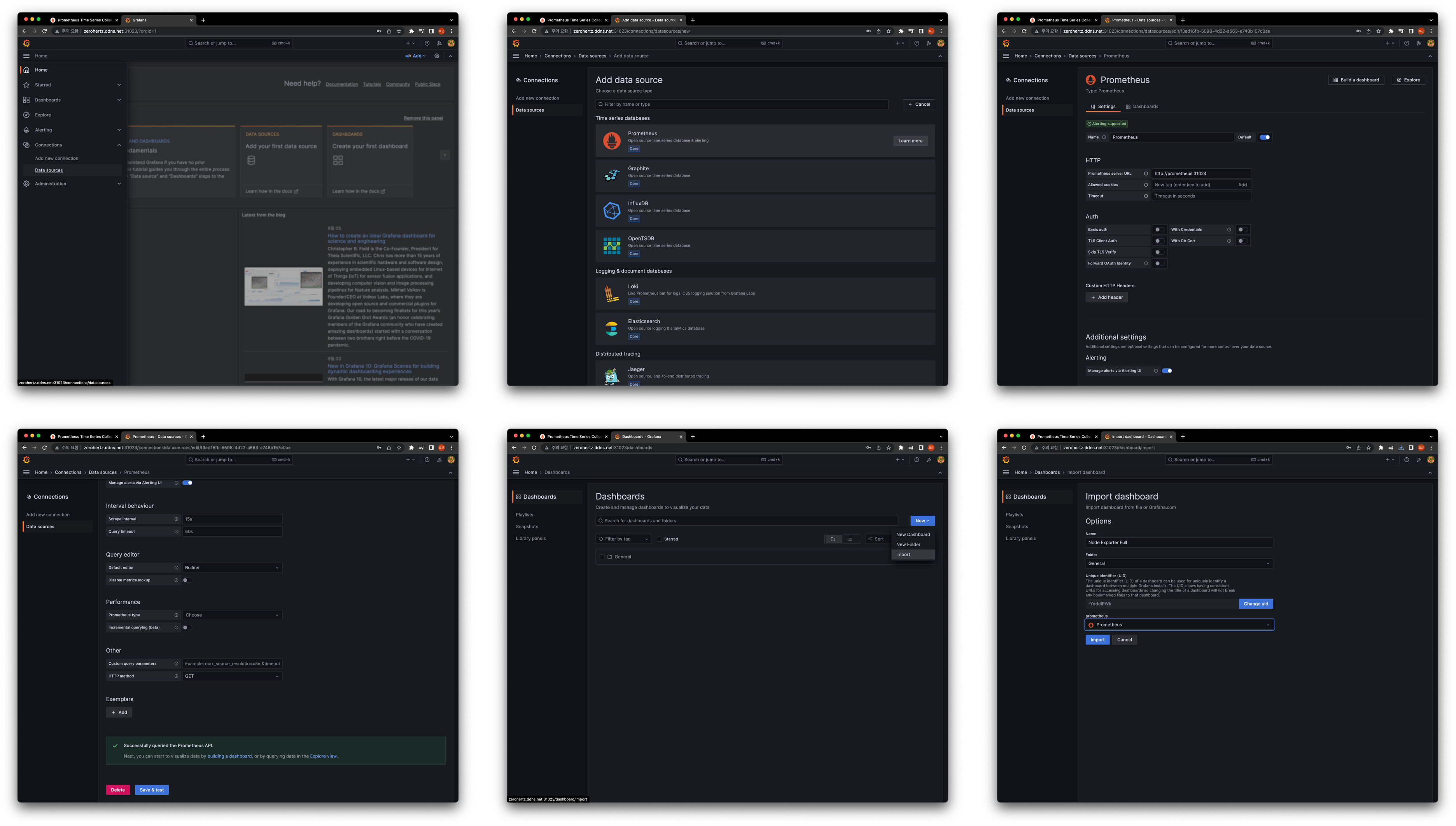

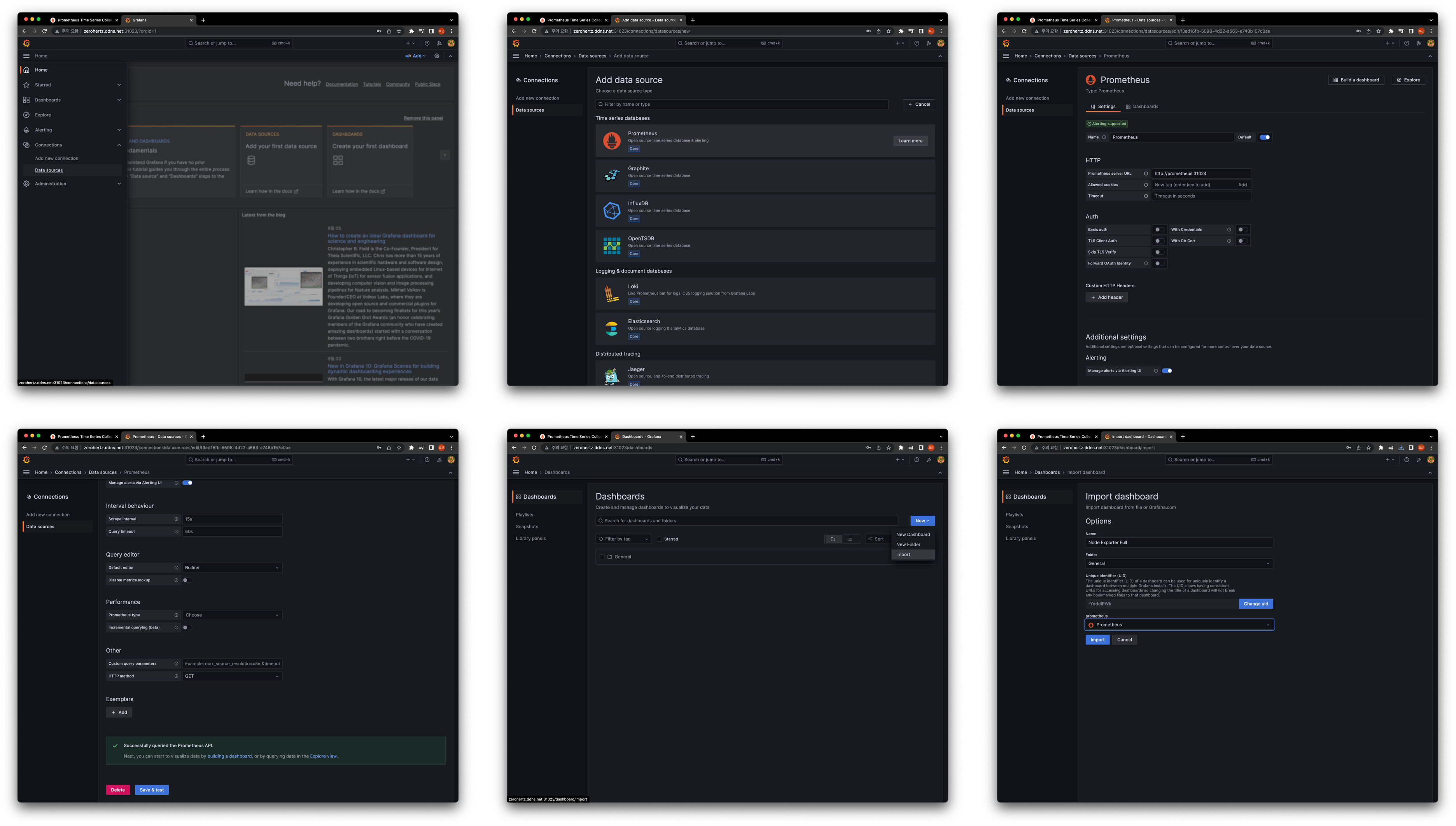

Grafana

grafana.yaml
```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana
  namespace: monitoring
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: monitoring
spec:
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      containers:
        - image: grafana/grafana
          name: grafana
          env:
            - name: GF_SECURITY_ADMIN_USER
              value: ${ID}
            - name: GF_SECURITY_ADMIN_PASSWORD
              value: ${PASSWORD}
          ports:
            - containerPort: 3000
          volumeMounts:
            - name: data
              mountPath: /var/lib/grafana
      volumes:
        - name: data
          persistentVolumeClaim:
            claimName: grafana
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: monitoring
spec:
  type: NodePort
  ports:
    - port: ${Grafana_Port}
      targetPort: 3000
      nodePort: ${Grafana_Port}
  selector:
    app: grafana
```

```shell
$ kubectl apply -f grafana.yaml
```
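With `type: NodePort`, the value substituted for `${Grafana_Port}` is used both as the Service `port` and as the `nodePort`, and the API server rejects a `nodePort` outside its `--service-node-port-range` (30000-32767 by default). A tiny pre-flight check such as this one (hypothetical helper; the default range is assumed, so adjust it if the cluster was configured differently) catches a bad value before `kubectl apply` does.

```python
DEFAULT_NODE_PORT_RANGE = (30000, 32767)  # Kubernetes default --service-node-port-range


def check_node_port(port: int, port_range=DEFAULT_NODE_PORT_RANGE) -> int:
    """Return the port if it is a valid NodePort for the given range, else raise ValueError."""
    low, high = port_range
    if not low <= port <= high:
        raise ValueError(f"nodePort {port} is outside {low}-{high}")
    return port
```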

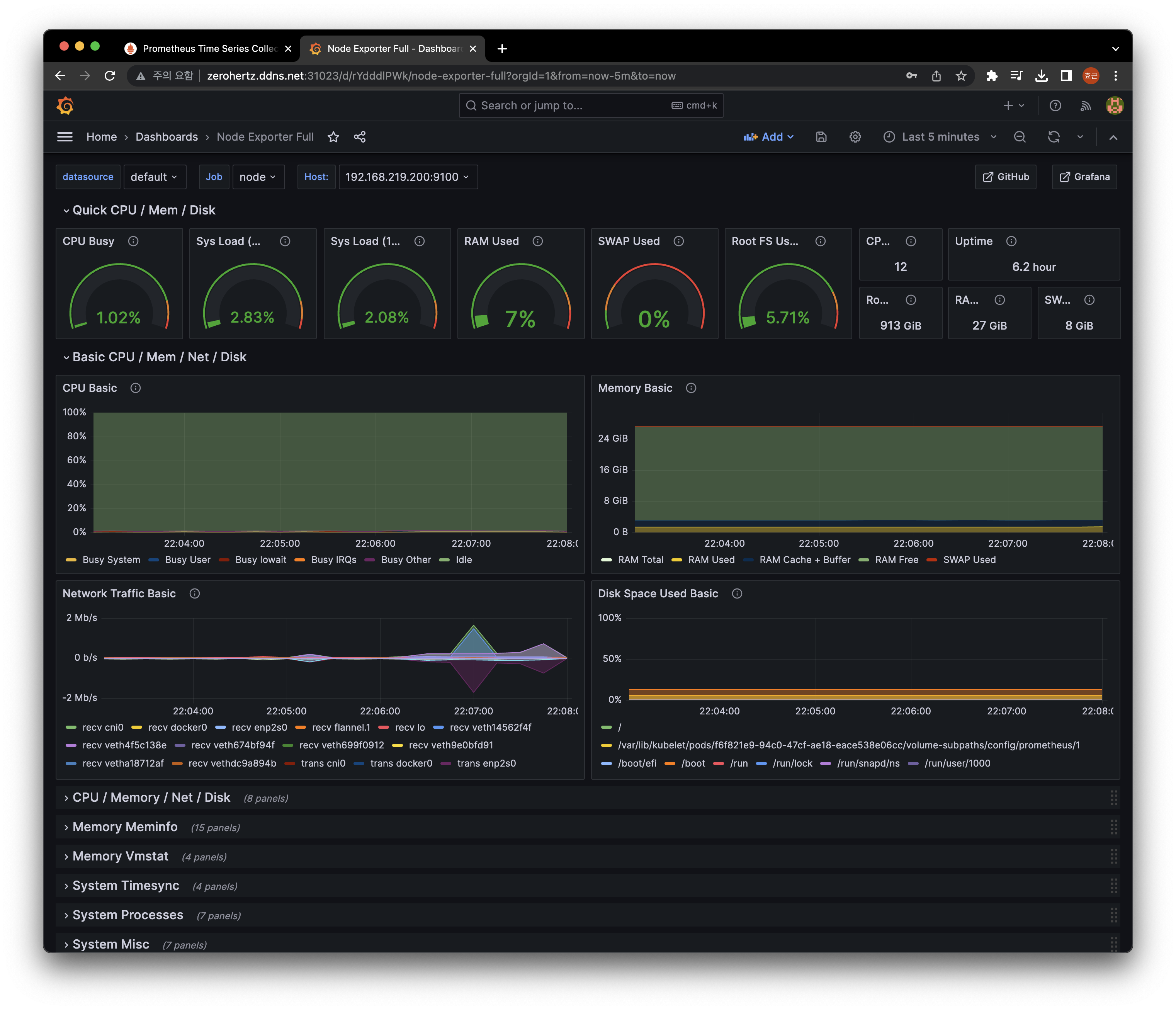

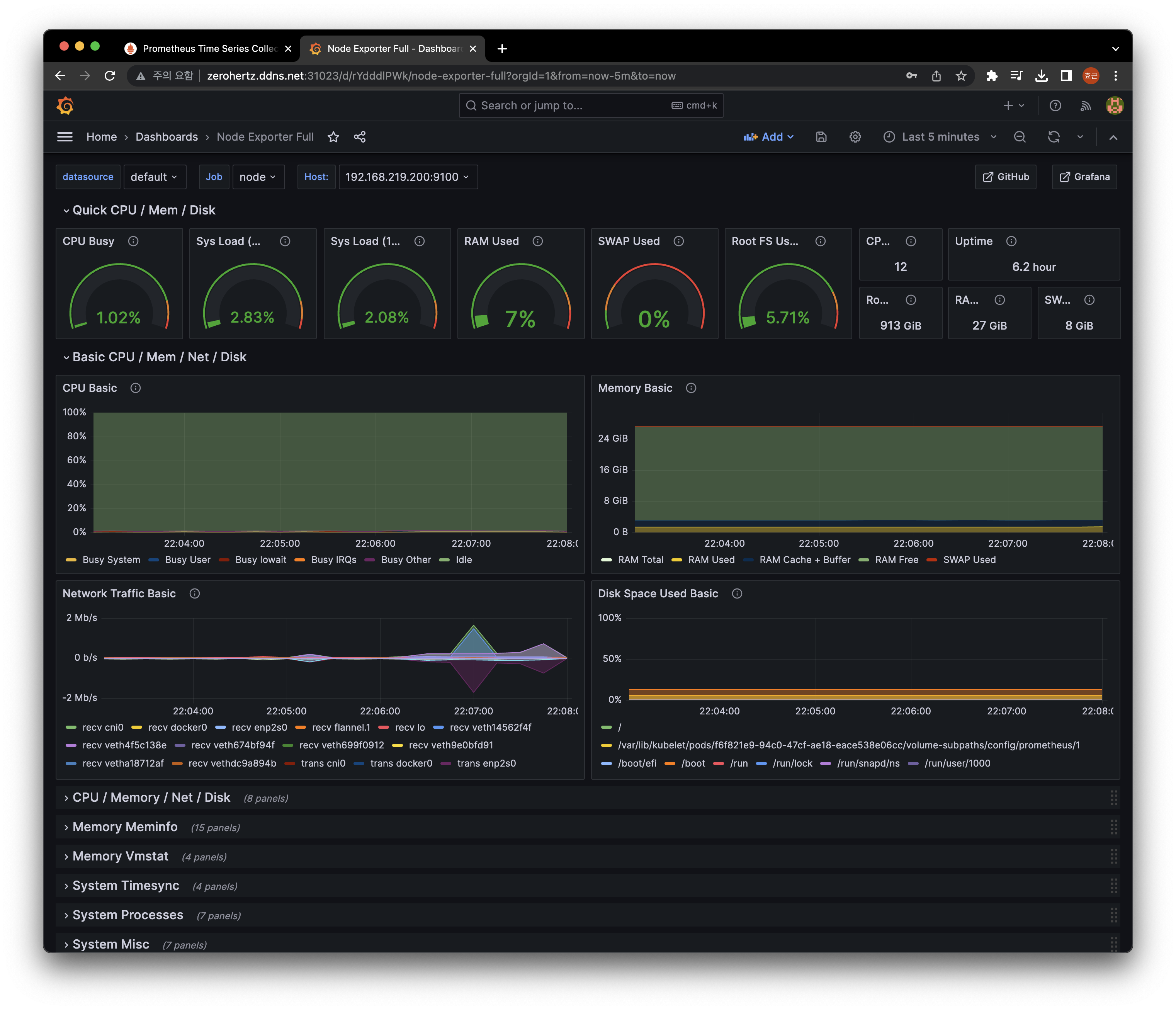

I built the dashboard by importing Node Exporter Full!
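CPU panels in dashboards like Node Exporter Full are built on the `node_cpu_seconds_total` counter, typically with PromQL along the lines of `100 * (1 - avg(rate(node_cpu_seconds_total{mode="idle"}[5m])))`. The sketch below reproduces approximately the same arithmetic by hand from two scrapes of that counter, which can help when a panel's number looks surprising. The helper name `cpu_busy_percent` is hypothetical, and the inputs are assumed to be per-mode totals already summed over all CPUs.

```python
def cpu_busy_percent(before: dict, after: dict) -> float:
    """Busy CPU % between two scrapes of node_cpu_seconds_total.

    `before`/`after` map mode -> seconds, already summed over all CPUs.
    Busy = 1 - (idle seconds elapsed / total seconds elapsed).
    """
    delta = {mode: after[mode] - before[mode] for mode in after}
    total = sum(delta.values())
    if total <= 0:
        raise ValueError("counters did not advance (or were reset) between scrapes")
    return 100.0 * (1.0 - delta["idle"] / total)
```

For example, if idle advanced by 15 s, user by 10 s and system by 5 s between scrapes, the CPUs were busy for half of the elapsed time, so the function returns 50.0.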

Ingress

Trying Ingress and failing... (Traefik)

traefik-config.yaml
```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: traefik-conf
  namespace: kube-system
data:
  traefik.toml: |
    [entryPoints]
      [entryPoints.web]
        address = ":80"
      [entryPoints.websecure]
        address = ":443"
      [entryPoints.grafana]
        address = ":${Grafana_Port}"
      [entryPoints.prometheus]
        address = ":${Prometheus_Port}"
```

```shell
$ kubectl apply -f traefik-config.yaml
configmap/traefik-conf created
$ kubectl edit deployment traefik -n kube-system
deployment.apps/traefik edited
```

traefik (Deployment)
```yaml
...
spec:
  ...
  template:
    ...
    spec:
      containers:
        - ...
          volumeMounts:
            - mountPath: /data
              name: data
            - mountPath: /tmp
              name: tmp
            - name: config
              mountPath: /etc/traefik
              readOnly: true
          ...
      volumes:
        - emptyDir: {}
          name: data
        - emptyDir: {}
          name: tmp
        - name: config
          configMap:
            name: traefik-conf
```

```shell
$ kubectl rollout restart deployment/traefik -n kube-system
deployment.apps/traefik restarted
$ kubectl edit svc traefik -n kube-system
```

traefik (Service)
```yaml
spec:
  ...
  ports:
    ...
    - name: grafana
      nodePort: ${Grafana_Port}
      port: ${Grafana_Port}
      protocol: TCP
      targetPort: ${Grafana_Port}
    - name: prometheus
      nodePort: ${Prometheus_Port}
      port: ${Prometheus_Port}
      protocol: TCP
      targetPort: ${Prometheus_Port}
  ...
```

```shell
$ kubectl get svc -n kube-system
NAME             TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                  AGE
kube-dns         ClusterIP      10.43.0.10      <none>        53/UDP,53/TCP,9153/TCP   11m
metrics-server   ClusterIP      10.43.195.230   <none>        443/TCP                  11m
traefik          LoadBalancer   10.43.66.36     ${IP}         80:32633/TCP,443:31858/TCP,${Grafana_Port}:${Grafana_Port}/TCP,${Prometheus_Port}:${Prometheus_Port}/TCP   11m
```
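In the `PORT(S)` column, each comma-separated entry reads `port:nodePort/protocol` when the port is also exposed on the nodes (as for the LoadBalancer `traefik` Service), or just `port/protocol` otherwise. A small parser (hypothetical `parse_ports`; ports are kept as strings so placeholder values survive) makes that layout explicit.

```python
from typing import List, NamedTuple, Optional


class PortMapping(NamedTuple):
    port: str
    node_port: Optional[str]  # None when no node port is allocated
    protocol: str


def parse_ports(column: str) -> List[PortMapping]:
    """Split a `kubectl get svc` PORT(S) value such as "80:32633/TCP,443:31858/TCP"."""
    mappings = []
    for entry in column.split(","):
        ports, protocol = entry.split("/")
        port, _, node_port = ports.partition(":")
        mappings.append(PortMapping(port, node_port or None, protocol))
    return mappings
```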

Still not having learned my lesson, I tried Ingress again to bring in HTTPS, and failed again ~

```shell
$ openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=${HOST}"
$ kubectl create secret tls grafana-tls --key tls.key --cert tls.crt -n monitoring
$ kubectl get secret -n monitoring
NAME          TYPE                DATA   AGE
grafana-tls   kubernetes.io/tls   2      36s
```

grafana.yaml
```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana-ingress
  namespace: monitoring
spec:
  rules:
    - host: ${HOST}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana
                port:
                  number: ${Grafana_Port}
  tls:
    - hosts:
        - ${HOST}
      secretName: grafana-tls
```

```shell
$ kubectl apply -f grafana.yaml
```

traefik.yaml
```yaml
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: grafana-ingressroute
  namespace: monitoring
spec:
  entryPoints:
    - websecure
  routes:
    - match: Host(`${HOST}`)
      kind: Rule
      services:
        - name: grafana
          port: ${Grafana_Port}
  tls:
    secretName: grafana-tls
```

```shell
$ kubectl apply -f traefik.yaml
$ kubectl get ingressroute -n monitoring
```

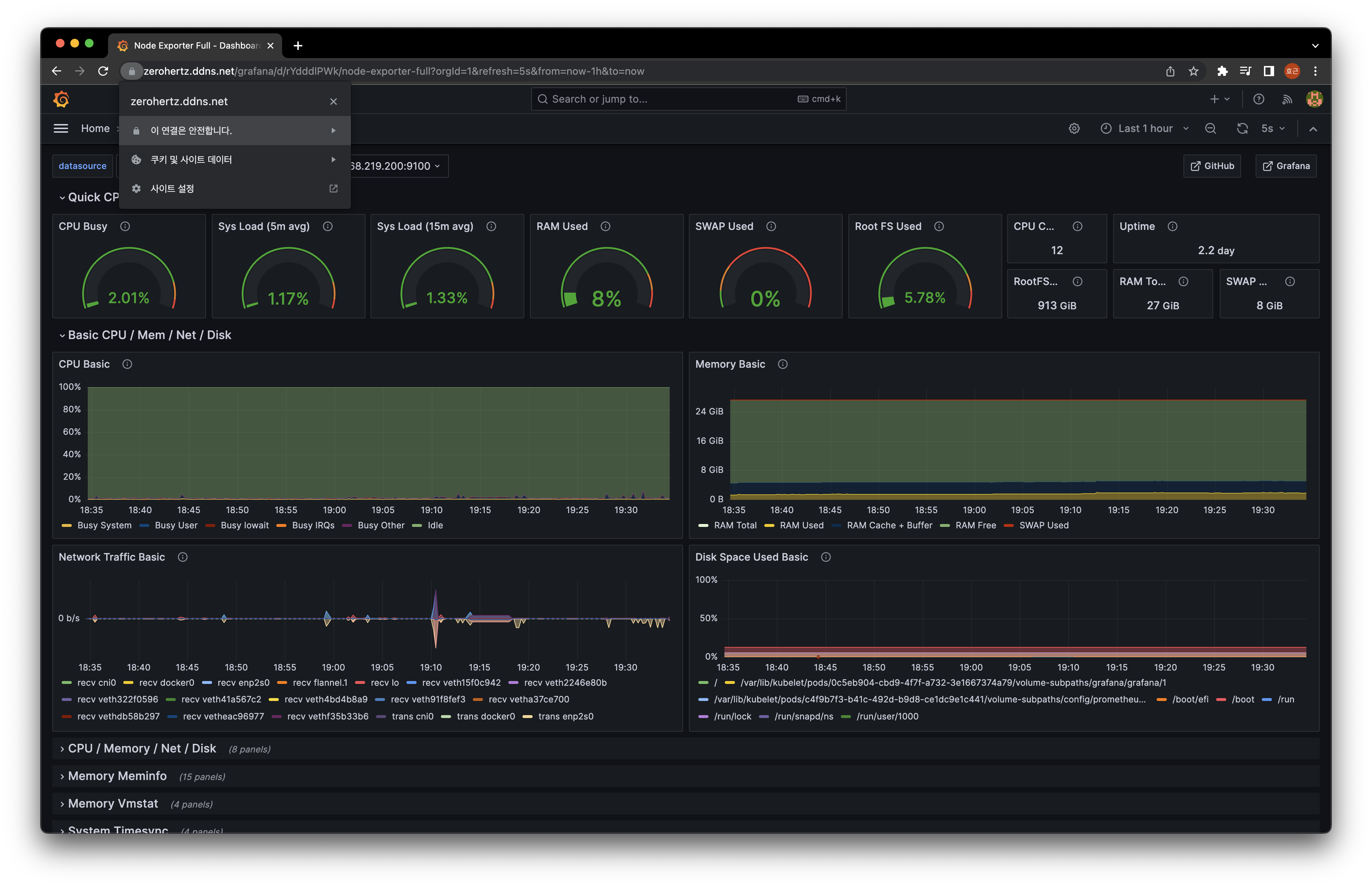

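After a lot of trial and error, I finally got it working!

As covered in a separate post, I first learned how to serve HTTPS through Traefik, and then got this working by adding a path on top of that!

Here is how to set up a path and deploy the monitoring services over HTTPS.

Because Grafana itself also needs to know about the path, first create the following config file and build a ConfigMap from it.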

grafana.ini
```ini
[server]
root_url = https://${DDNS}/grafana
```

```shell
$ kubectl create configmap grafana --from-file=grafana.ini=configmap/grafana.ini -n monitoring
```
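The sub-path lives in two places that must agree: the path component of Grafana's `root_url`, and the prefix stripped by the Traefik Middleware (which in turn has to match the IngressRoute's `PathPrefix`). The sketch below reads `grafana.ini` with `configparser` and compares the two; it assumes the ini file is simple enough for `configparser` to read, and `root_url_path` / `check_sub_path` are hypothetical names.

```python
import configparser
from urllib.parse import urlparse


def root_url_path(ini_text: str) -> str:
    """Return the path part of [server] root_url, without a trailing slash."""
    parser = configparser.ConfigParser(interpolation=None)  # keep "%" and "${...}" literal
    parser.read_string(ini_text)
    root_url = parser.get("server", "root_url")
    return urlparse(root_url).path.rstrip("/")


def check_sub_path(ini_text: str, strip_prefix: str) -> bool:
    """True if Grafana's root_url path matches the Middleware's stripPrefix prefix."""
    return root_url_path(ini_text) == strip_prefix.rstrip("/")
```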

Next, mount the ConfigMap declared above into the Deployment and set the path in a Middleware and an IngressRoute, and you're done.

grafana.yaml
```yaml
...
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: monitoring
spec:
  ...
  template:
    ...
    spec:
      containers:
        - ...
          volumeMounts:
            ...
            - name: grafana
              mountPath: /etc/grafana/grafana.ini
              subPath: grafana.ini
      volumes:
        ...
        - name: grafana
          configMap:
            name: grafana
---
...
---
apiVersion: traefik.containo.us/v1alpha1
kind: Middleware
metadata:
  name: grafana
  namespace: monitoring
spec:
  stripPrefix:
    prefixes:
      - "/grafana"
---
apiVersion: traefik.containo.us/v1alpha1
kind: IngressRoute
metadata:
  name: grafana
  namespace: monitoring
spec:
  entryPoints:
    - websecure
  routes:
    - match: Host(`${DDNS}`) && PathPrefix(`/grafana`)
      kind: Rule
      middlewares:
        - name: grafana
      services:
        - name: grafana
          port: 80
  tls:
    certResolver: myresolver
```
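To see why both pieces are needed: the IngressRoute only matches requests for the DDNS host whose path starts with `/grafana`, the Middleware strips that prefix before the request reaches the Service, and Grafana, knowing its `root_url`, builds links that put `/grafana` back. The following is a simplified model of that flow, not Traefik's or Grafana's actual code; the host name and the helpers `route` and `public_link` are hypothetical, and details such as case-insensitive host matching and forwarded headers are ignored.

```python
from typing import Optional

HOST = "example.ddns.net"  # hypothetical stand-in for ${DDNS}
PREFIX = "/grafana"
ROOT_URL = f"https://{HOST}{PREFIX}"  # mirrors root_url in grafana.ini


def route(host: str, path: str) -> Optional[str]:
    """Return the path Grafana receives, or None if the IngressRoute does not match.

    Models `Host(HOST) && PathPrefix(PREFIX)` followed by a stripPrefix middleware.
    """
    if host != HOST or not path.startswith(PREFIX):
        return None
    stripped = path[len(PREFIX):]
    # Approximates stripPrefix: the forwarded path always starts with "/"
    return stripped if stripped.startswith("/") else "/" + stripped


def public_link(app_path: str) -> str:
    """How an absolute link is built from root_url (simplified)."""
    return ROOT_URL.rstrip("/") + "/" + app_path.lstrip("/")
```

So a browser request for `/grafana/login` reaches Grafana as `/login`, and the links Grafana sends back point at `https://<DDNS>/grafana/...` again. Without the Middleware Grafana would receive `/grafana/login`, and without `root_url` its links would drop the prefix.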