CKAD (Certified Kubernetes Application Developer)

Introduction

CKAD: an official CNCF certification that validates the ability to effectively design, build, deploy, and configure cloud-native applications on Kubernetes.

The CKAD curriculum lists the topics the exam covers, as follows.

As of v1.34:

| Domain | Weight | Key Points |
|---|---|---|
| Application Design and Build | 20% | ✅ Define, build and modify container images ✅ Choose and use the right workload resource (Deployment, DaemonSet, CronJob, etc.) ✅ Understand multi-container Pod design patterns (e.g. sidecar, init and others) ✅ Utilize persistent and ephemeral volumes |
| Application Deployment | 20% | ✅ Use Kubernetes primitives to implement common deployment strategies (e.g. blue/green or canary) ✅ Understand Deployments and how to perform rolling updates ✅ Use the Helm package manager to deploy existing packages ✅ Kustomize |
| Application Observability and Maintenance | 15% | ✅ Understand API deprecations ✅ Implement probes and health checks ✅ Use built-in CLI tools to monitor Kubernetes applications ✅ Utilize container logs ✅ Debugging in Kubernetes |
| Application Environment, Configuration and Security | 25% | ✅ Discover and use resources that extend Kubernetes (CRD, Operators) ✅ Understand authentication, authorization and admission control ✅ Understand requests, limits, quotas ✅ Define resource requirements ✅ Understand ConfigMaps ✅ Create & consume Secrets ✅ Understand ServiceAccounts ✅ Understand Application Security (SecurityContexts, Capabilities, etc.) |
| Services and Networking | 20% | ✅ Demonstrate basic understanding of NetworkPolicies ✅ Provide and troubleshoot access to applications via services ✅ Use Ingress rules to expose applications |

- Price: \$395 (currently \$445)

- Duration: 2 hours

- Questions: 15-20

- Location: a quiet, enclosed space

- What to bring: ID (name in English required)

You can register for the CKAD exam by paying on the official site.

A coupon provided by the Linux Foundation can discount the regular \$395 price. (I received a 50% discount and paid \$197.50.)

Once payment is complete, you must schedule the exam within one year.

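As with the CKA, the exam is taken in a multi-cluster environment (although this no longer exists in the current exam environment), and you can verify in advance the device you plan to use for the exam.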

As with the CKA, Mumshad's Udemy course for the CKAD is very well known, so I took it.

The course includes access to KodeKloud, where you can practice under conditions similar to the real exam.

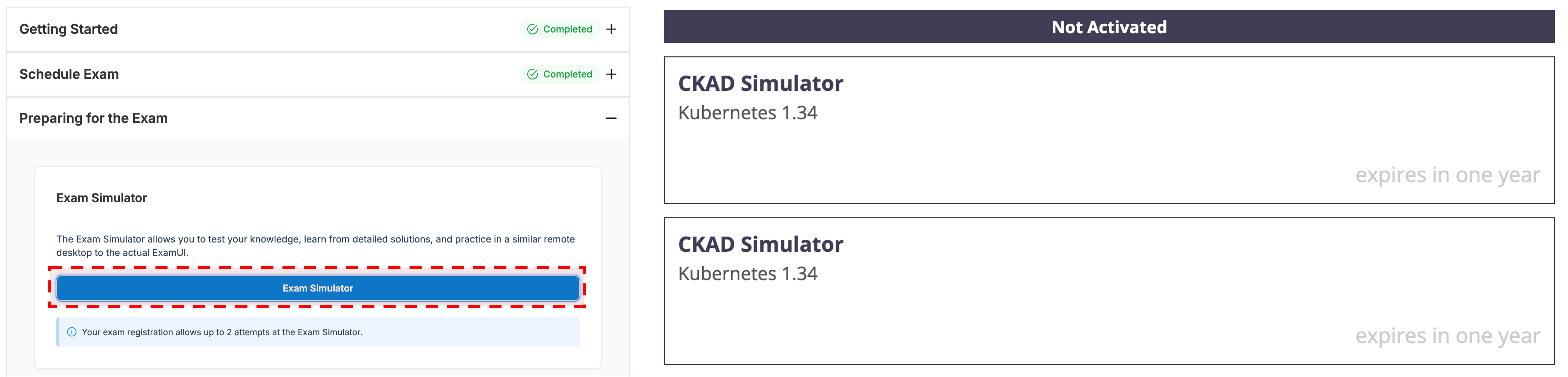

Finally, paying for the exam grants two sessions of the killer.sh simulator, so I worked through them as a review.

Theoretical Backgrounds

Recap Core Concepts

Pods

`kubectl get po | wc -l`
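One detail worth keeping in mind for the counting commands in these notes: `kubectl get` prints a header row, so piping it into `wc -l` yields one more than the number of resources. The sketch below uses simulated output with hypothetical pod names so that it runs without a cluster; on a real cluster, `kubectl get po --no-headers | wc -l` avoids the off-by-one.

```shell
# Simulated `kubectl get po` output (hypothetical pods), so no cluster is needed.
sample_output='NAME      READY   STATUS    RESTARTS   AGE
nginx-1   1/1     Running   0          5m
nginx-2   1/1     Running   0          5m
nginx-3   1/1     Running   0          5m'

# wc -l counts the header row as well.
total_lines=$(printf '%s\n' "$sample_output" | wc -l | tr -d ' ')

# Skipping the first line gives the actual number of pods.
pod_count=$(printf '%s\n' "$sample_output" | tail -n +2 | wc -l | tr -d ' ')

echo "lines including header: $total_lines"
echo "actual pods: $pod_count"
```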

ReplicaSets

`kubectl get po | wc -l`

Deployments

`kubectl get po | wc -l`

Namespaces

`kubectl get ns | wc -l`

Imperative Commands

`kubectl run nginx-pod --image nginx:alpine`

Configuration

Commands and Arguments

`kubectl get po | wc -l`

ConfigMaps

`kubectl get po | wc -l`

Secrets

`kubectl get secret | wc -l`

Security Contexts

`kubectl exec -it ubuntu-sleeper -- whoami`

Resource Limits

`kubectl describe po rabbit | grep cpu: -B1`

Service Account

`kubectl get sa | wc -l`

Taints and Tolerations

`kubectl get node | wc -l`

Node Affinity

`kubectl describe node node01 | grep -i label -A5`

Multi-Container PODs

Multi-Container PODs

`kubectl get po red`

Readiness Probes

`./curl-test.sh`

Logging

`kubectl logs webapp-1 | grep USER5`

Monitoring

`kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml`

Init Containers

`kubectl get po -oyaml | grep initContainer -B30 | grep name`

POD Design

Labels and Selectors

`kubectl get po -l env=dev | wc -l`

Rolling Updates & Rollbacks

`./curl-test.sh`

Jobs and CronJobs

`kubectl apply -f throw-dice-pod.yaml`

Services & Networking

Kubernetes Services

`kubectl get svc`

Network Policies

`kubectl get networkpolicy`

Ingress Networking - 1

`kubectl get po -A | grep ingress`

Ingress Networking - 2

`vim tmp.yaml`

State Persistence

Persistent Volumes

`kubectl get po webapp -oyaml | sed '/^status:/,$d' > tmp.yaml`
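The `sed '/^status:/,$d'` step deletes everything from the top-level `status:` key to the end of the output, leaving only the part of the manifest that can be edited and re-applied. A minimal sketch of that behaviour, using a hand-written sample manifest (the image and status values are assumptions, not taken from the lab) so that it runs without a cluster:

```shell
# Hypothetical manifest standing in for `kubectl get po webapp -oyaml` output.
manifest='apiVersion: v1
kind: Pod
metadata:
  name: webapp
spec:
  containers:
  - name: webapp
    image: nginx:alpine
status:
  phase: Running
  podIP: 10.0.0.5'

# Delete from the line starting with "status:" through the last line.
stripped=$(printf '%s\n' "$manifest" | sed '/^status:/,$d')

printf '%s\n' "$stripped"
```

Anchoring the pattern with `^` matters: an unanchored `/status:/` would also match an indented key containing `status:` and could cut the manifest too early.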

Storage Class

`kubectl get sc | wc -l`

Updates for Sep 2021 Changes

Practice test Docker Images

`docker images | wc -l`

Practice Test KubeConfig

`kubectl config get-clusters`

Practice Test Role Based Access Controls

`kubectl get -n kube-system po kube-apiserver-controlplane -oyaml | grep -i auth`

Practice Test Cluster Roles

`kubectl get clusterrole | wc -l`

Labs - Admission Controllers

`sed -i 's/true/false/' /etc/kubernetes/imgvalidation/imagepolicy-conf.yaml`

Labs - Validating and Mutating Admission Controllers

`kubectl create ns webhook-demo`

Lab - API Versions/Deprecations

`kubectl api-resources | grep authorization`

Practice Test - Custom Resource Definition

`cat crd.yaml | sed '5s/name:/& internals.datasets.kodekloud.com/' | sed 's/group:/& datasets.kodekloud.com/' | sed 's/scope:/& Namespaced/' | sed 's/name: v2/name: v1/' | sed 's/served: false/served: true/' | sed 's/plural: internal/&s/' | kubectl apply -f -`

Practice Test - Deployment strategies

`kubectl describe deploy frontend | grep -i strategy`

Labs - Install Helm

`cat /etc/os-release | grep NAME`

Labs - Helm Concepts

`helm repo add bitnami https://charts.bitnami.com/bitnami`

Lab - Managing Directories 🆕

`apiVersion: kustomize.config.k8s.io/v1beta1`

Lab - Transformers 🆕

`cat code/k8s/kustomization.yaml | grep -i label -A1`

Lab - Patches 🆕

`cat code/k8s/kustomization.yaml | grep nginx-deploy -A4`

Lab - Overlay 🆕

`kubectl kustomize code/k8s/overlays/prod | grep api-deploy -A20 | grep image`

Lab - Components 🆕

`cat code/project_mercury/overlays/community/kustomization.yaml`

Lightning Labs

Lab: Lightning Lab - 1

`kubectl run logger --image nginx:alpine --dry-run=client -oyaml >> 1.yaml`

Lab: Lightning Lab - 2

`kubectl get po -n dev1401 nginx1401 -oyaml | sed '/status:/,$d' | sed 's/8080/9080/g' | sed '/timeoutSeconds/a\ livenessProbe:\n exec:\n command: ["ls", "/var/www/html/file_check"]\n initialDelaySeconds: 10\n periodSeconds: 60' | kubectl replace --force -f -`

Mock Exams

Mock Exam - 1

`kubectl run nginx-448839 --image nginx:alpine`

Mock Exam - 2

`kubectl create deploy my-webapp --image nginx --replicas 2 --dry-run=client -oyaml | sed '5a\ tier: frontend' | kubectl apply -f -`

Retrospective

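When you connect to the exam, you go through several steps for identity verification and cheating prevention.

I have a cat, which could have been a problem at this point, so I kept a nervous eye on the cat throughout the exam… (🐈‍⬛)

My exam had 17 questions. I finished them in about 1 hour 30 minutes of the 2 hours and submitted as is.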

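The CKA focused on etcd backups and cluster-wide failure recovery, so I had to be careful not to make mistakes while switching contexts. In the CKAD, I connected to each server over ssh and then worked on the cluster, which made it considerably easier.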

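Beyond that, ResourceQuota and LimitRange were not covered by the KodeKloud practice questions, yet two questions on them appeared. That threw me a little, but I worked through them calmly while reading the official documentation, which I think is why the result was good.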
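For anyone preparing, a minimal sketch of what these two resources look like may help. The namespace, names, and every value below are hypothetical and are not the actual exam questions. The script only writes the manifests to a temporary file, so it runs without a cluster.

```shell
# Write a sample ResourceQuota and LimitRange (all values are illustrative).
workdir=$(mktemp -d)

cat > "$workdir/quota-and-limits.yaml" <<'EOF'
apiVersion: v1
kind: ResourceQuota
metadata:
  name: demo-quota           # hypothetical name
  namespace: demo            # hypothetical namespace
spec:
  hard:                      # caps on the namespace as a whole
    pods: "10"
    requests.cpu: "2"
    requests.memory: 2Gi
    limits.cpu: "4"
    limits.memory: 4Gi
---
apiVersion: v1
kind: LimitRange
metadata:
  name: demo-limits          # hypothetical name
  namespace: demo
spec:
  limits:
  - type: Container          # per-container defaults and ceiling
    default:                 # limits applied when a container sets none
      cpu: 500m
      memory: 256Mi
    defaultRequest:          # requests applied when a container sets none
      cpu: 250m
      memory: 128Mi
    max:
      cpu: "1"
      memory: 512Mi
EOF

echo "wrote $workdir/quota-and-limits.yaml"
# On a real cluster: kubectl apply -f "$workdir/quota-and-limits.yaml"
```

The two work together: a ResourceQuota caps the total consumption of a namespace, while a LimitRange sets defaults and bounds for individual containers. Once a quota on CPU or memory requests or limits is in place, pods that do not specify those values are rejected unless a LimitRange supplies defaults.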

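I would like to go for Kubestronaut, but the certification prices and the exchange rate have risen so much that I think I can close out my Kubernetes certification series with no regrets…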