Paper Review: PagedAttention

Efficient Memory Management for Large Language Model Serving with PagedAttention

Introduction

With the emergence of large language models (LLMs) such as GPT [5, 37] and PaLM [9], new applications such as programming assistants [6, 18] and general-purpose chatbots [19, 35] have begun to profoundly impact our work and daily lives.

Many cloud companies [34, 44] are racing to provide these applications as hosted services. However, running them is very expensive and requires a large number of hardware accelerators such as GPUs.

According to recent estimates, processing an LLM request can be 10$\times$ more expensive than a traditional keyword query [43].

Given these high costs, increasing the throughput of LLM serving systems, and thereby reducing the cost per request, is becoming increasingly important.

At the core of an LLM lies an autoregressive Transformer model [53]. This model generates words (tokens) one at a time. Each token is conditioned on the input (prompt) and on the sequence of output tokens the model has generated so far.

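For each request, this expensive process is repeated until the model outputs a termination token. This sequential generation process makes the workload memory-bound. As a result, it underutilizes the computation power of GPUs and limits serving throughput.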
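The sequential generation loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation. `next_token` stands in for a full Transformer forward pass, and `EOS`, `generate`, and `toy_model` are hypothetical names chosen for the example.

```python
from typing import Callable, List

EOS = 0  # hypothetical id of the termination token


def generate(prompt: List[int],
             next_token: Callable[[List[int]], int],
             max_new_tokens: int = 16) -> List[int]:
    """Greedy autoregressive decoding: emit one token per step until EOS."""
    tokens = list(prompt)      # prompt + every token generated so far
    output: List[int] = []
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # each step conditions on the full history
        if tok == EOS:            # stop once the termination token appears
            break
        tokens.append(tok)
        output.append(tok)
    return output


def toy_model(context: List[int]) -> int:
    """Toy stand-in for an LLM: counts down from the last token to EOS."""
    return max(context[-1] - 1, EOS)


print(generate([5], toy_model))  # [4, 3, 2, 1]
```

Each iteration depends on the token produced by the previous one. The steps of a single request must therefore run strictly in order and cannot be parallelized.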

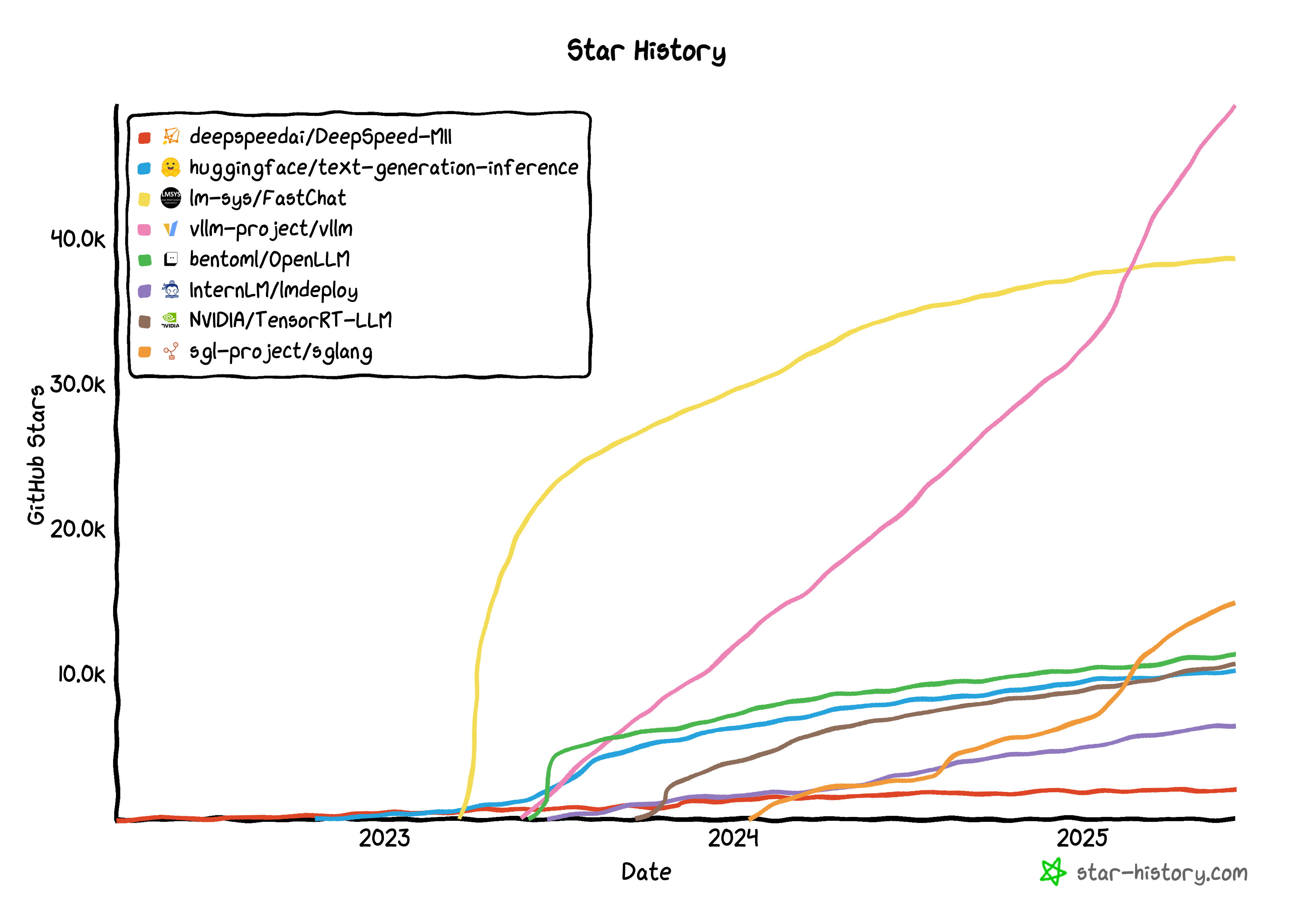

Figure 1. Memory layout when serving an LLM with 13B parameters on NVIDIA A100 (parameters: 26 GB, 65%; KV cache: >30%; others: remainder). The parameters persist in GPU memory throughout serving. The memory for the KV cache is (de)allocated per serving request.

Throughput can be improved by batching multiple requests together. However, to process many requests in a batch, the memory for each request must be managed efficiently.

For example, Fig. 1 shows the memory distribution of a 13B-parameter LLM on an NVIDIA A100 GPU with 40GB of RAM.

Approximately 65% of the memory is allocated to the model weights, which remain static during serving. About 30% of the memory is used to store the dynamic states of the requests.
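The 65% figure can be reproduced with simple arithmetic. This sketch assumes that the weights are stored in FP16 (2 bytes per parameter) and that 1 GB = 10^9 bytes; neither detail is stated in the text above. `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory needed for model weights in GB (1 GB = 1e9 bytes); FP16 by default."""
    return num_params * bytes_per_param / 1e9


GPU_MEMORY_GB = 40                # NVIDIA A100 with 40GB of memory
weights = weight_memory_gb(13e9)  # 13B parameters, assumed FP16
print(f"{weights:.0f} GB, {weights / GPU_MEMORY_GB:.0%} of GPU memory")
# 26 GB, 65% of GPU memory
```

The 26 GB result matches the parameter slice in Fig. 1. This suggests the FP16 assumption is consistent with the figure.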

In Transformers, these states consist of the key and value tensors associated with the attention mechanism, commonly called the KV cache [41]. They represent the context of earlier tokens and are used to generate new output tokens in sequence.
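A minimal single-layer, single-head KV cache can be sketched in pure Python. It shows how cached keys and values carry the context of earlier tokens. The `KVCache` class and its methods are hypothetical illustrations. Real serving systems keep these tensors per layer and per attention head in GPU memory.

```python
import math
from typing import List

Vector = List[float]


class KVCache:
    """Single-layer, single-head KV cache (illustrative sketch only)."""

    def __init__(self) -> None:
        self.keys: List[Vector] = []    # one key vector per past token
        self.values: List[Vector] = []  # one value vector per past token

    def append(self, k: Vector, v: Vector) -> None:
        """Store the key/value of the newest token; older entries are reused."""
        self.keys.append(k)
        self.values.append(v)

    def attend(self, q: Vector) -> Vector:
        """Scaled dot-product attention of q over all cached tokens (needs >= 1 entry)."""
        d = len(q)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d)
                  for k in self.keys]
        m = max(scores)                           # subtract max for numerical stability
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]    # softmax over cached tokens
        dim = len(self.values[0])
        return [sum(w * v[j] for w, v in zip(weights, self.values))
                for j in range(dim)]


cache = KVCache()
# Each decoding step appends only the new token's key and value.
for k, v in [([1.0, 0.0], [1.0, 0.0]), ([0.0, 1.0], [0.0, 1.0])]:
    cache.append(k, v)
print(cache.attend([0.0, 0.0]))  # equal scores -> [0.5, 0.5]
```

At each step, only the new token's key and value are computed and appended. The keys and values of earlier tokens are read from the cache instead of being recomputed. The cache therefore grows by one entry per generated token, so its memory footprint changes per request.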

The remaining memory is used by other data, including activations, which are the ephemeral tensors created when evaluating the LLM.

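Since the model weights are constant and the activations occupy only a small fraction of GPU memory, the way the KV cache is managed is critical in determining the maximum batch size. When managed inefficiently, the KV cache memory can significantly limit the batch size and, consequently, the throughput of the LLM.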
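The link between KV cache memory and batch size can be made concrete by estimating how many requests fit in a fixed KV cache budget. All numbers in this sketch are illustrative assumptions:

- an OPT-13B-like configuration (40 layers, hidden size 5120, FP16);
- a 12 GB KV cache budget (30% of 40 GB);
- a reserved maximum length of 2048 tokens per request;
- an average actual length of 300 tokens.

`kv_bytes_per_token` and `max_batch_size` are hypothetical helpers.

```python
def kv_bytes_per_token(num_layers: int, hidden_size: int, bytes_per_elem: int = 2) -> int:
    """KV cache bytes for one token: a key and a value vector in every layer."""
    return 2 * num_layers * hidden_size * bytes_per_elem


def max_batch_size(kv_budget_bytes: int, per_token_bytes: int, tokens_per_request: int) -> int:
    """Number of requests that fit if each occupies `tokens_per_request` token slots."""
    token_slots = kv_budget_bytes // per_token_bytes
    return token_slots // tokens_per_request


# Illustrative assumptions: OPT-13B-like model (FP16) on a 40 GB GPU.
PER_TOKEN = kv_bytes_per_token(num_layers=40, hidden_size=5120)
KV_BUDGET = 12 * 10**9  # ~30% of 40 GB set aside for the KV cache

print(PER_TOKEN // 1024, "KiB per token")                             # 800 KiB per token
print(max_batch_size(KV_BUDGET, PER_TOKEN, tokens_per_request=2048))  # 7: reserve max length
print(max_batch_size(KV_BUDGET, PER_TOKEN, tokens_per_request=300))   # 48: actual length only
```

Under these assumptions, a single token's KV cache takes 819,200 bytes (800 KiB). If every request pre-reserves space for 2048 tokens, only 7 requests fit. If each request holds only the 300 tokens it actually uses, 48 would fit. The gap shows how the KV cache allocation policy, not just the hardware, can cap the batch size.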